The “Data Leak” Trap

Imagine a time-pressed CTO pasting a confidential RFP into a generative AI tool for quick analysis, getting the insights they need, and moving on. Months later, a competitor’s AI produces a response that mirrors the original pricing strategy almost word for word. There was no system breach and no external attack. Casual use of a standard subscription, combined with unsafe data-handling habits, was enough to cause a data leak.

In 2026, enterprise AI adoption is exploding, yet Gartner predicts that 40% of enterprises could face data leaks from shadow AI by 2030. The most immediate risk is a developer with a “Pro” subscription and a copy-paste habit.

This scenario isn’t hypothetical. It’s happening now, eroding trust and inviting regulatory scrutiny. Let’s look at how to lock this down.

Risks of Public LLMs

Public models like standard ChatGPT, Claude 3.5, or Gemini excel at brainstorming, rapid prototyping and casual queries. But they often treat user prompts as “contribution data” to improve future iterations unless explicitly opted out. Your proprietary code, secret project roadmap, customer PII or M&A strategies could theoretically surface in a competitor’s prompt response months later, repackaged as “helpful context.”

In 2023, Samsung banned the use of ChatGPT and similar tools on its company systems.

The ban followed reports that engineers in its semiconductor division had pasted proprietary source code and internal documents into the public AI tool to debug issues and summarize content, unintentionally placing highly confidential information outside the company’s control. Samsung became a cautionary example of how sensitive data can leak through public LLMs.

The incident holds a critical lesson for enterprises:

When confidential data is shared with public LLMs, the risk comes not from hackers, but from convenience-driven misuse and the lack of clear AI governance.

Safety of Enterprise/Private LLMs

Contrast this with enterprise-grade options like Azure OpenAI, OpenAI Enterprise, Amazon Bedrock, or Google Vertex AI. These provide contractual guarantees that your data is never used for training and remains isolated within your tenant. Azure OpenAI, for instance, can be locked down to private endpoints inside your VNet; OpenAI’s enterprise offerings are SOC 2 Type II audited and support zero-data-retention options.
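One way to enforce “enterprise endpoints only” in application code is a fail-closed allowlist check before any model call is made. This is a minimal sketch, assuming your approved deployment is an Azure OpenAI resource; the hostname `contoso-ai.openai.azure.com` and both helper functions are hypothetical illustrations, not part of any SDK.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: replace with your own tenant's approved endpoints.
SANCTIONED_HOSTS = {
    "contoso-ai.openai.azure.com",  # hypothetical Azure OpenAI resource
}


def is_sanctioned_endpoint(url: str) -> bool:
    """Return True only for HTTPS URLs whose host is on the approved list."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    return parsed.scheme == "https" and host in SANCTIONED_HOSTS


def require_sanctioned(url: str) -> str:
    """Fail closed: raise instead of silently calling an unapproved endpoint."""
    if not is_sanctioned_endpoint(url):
        raise PermissionError(f"Blocked call to unsanctioned LLM endpoint: {url}")
    return url
```

Calling `require_sanctioned()` wherever a client's base URL is configured means a misconfiguration fails loudly instead of quietly sending data to a public service.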

The benefits compound: you can fine-tune models on proprietary datasets without risk of cross-pollution, maintain detailed audit logs that support compliance requirements such as HIPAA and FedRAMP, and enforce role-based access control (RBAC) mapped to Entra ID groups.
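The RBAC idea can be sketched as a deny-by-default lookup from group membership to the model deployments a user may call. This assumes your app reads group identifiers from the token’s `groups` claim; the group names and deployment names below are hypothetical placeholders (real tokens carry group object IDs).

```python
# Hypothetical mapping from Entra ID group identifiers to the model
# deployments their members may call. Readable placeholders stand in
# for the GUIDs a real token would carry.
GROUP_DEPLOYMENTS: dict[str, set[str]] = {
    "grp-finance-analysts": {"gpt-4o-finance"},
    "grp-engineering": {"gpt-4o-code", "embeddings-internal"},
}


def allowed_deployments(user_groups: list[str]) -> set[str]:
    """Return the union of deployments granted by the user's groups."""
    allowed: set[str] = set()
    for group in user_groups:
        allowed |= GROUP_DEPLOYMENTS.get(group, set())
    return allowed


def can_call(user_groups: list[str], deployment: str) -> bool:
    """Deny by default: unknown groups or deployments grant nothing."""
    return deployment in allowed_deployments(user_groups)
```

Keeping the mapping in one place makes it easy to audit, and an unknown group simply grants nothing.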

Common Developer Pitfalls


- Hardcoding a public API key into an internal Streamlit app, Jupyter notebook, or GitHub repo.

- Failing to enable the “opt out of training” setting in personal ChatGPT Pro accounts used for work.

- Leaving a single unchecked browser tab open, which can expose gigabytes of intellectual property through screenshot uploads or prompt chaining.

- Connecting LangChain or LlamaIndex agents to unsanitized RAG stores, or letting CI/CD pipelines test against public endpoints.
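The hardcoded-key pitfall is easy to catch before it ships. Below is a minimal heuristic scanner sketch; the regex patterns are assumptions about common key formats (which change over time), so treat it as a first-pass filter and prefer a maintained tool such as GitHub secret scanning in production.

```python
import re
from pathlib import Path

# Heuristic patterns (assumptions): provider key formats change over time,
# so this is a first-pass filter, not a complete secret scanner.
KEY_PATTERNS = {
    "openai_style_key": re.compile(r"\bsk-(?:proj-)?[A-Za-z0-9_-]{20,}"),
    "generic_assignment": re.compile(
        r"(?i)(api[_-]?key|secret)\s*[:=]\s*[\"'][^\"']{16,}[\"']"
    ),
}

SCANNED_SUFFIXES = {".py", ".ipynb", ".toml", ".yaml", ".yml", ".json"}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) pairs for suspicious lines."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in KEY_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def scan_repo(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan source, notebook, and config files (plus .env files) under root."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and (path.suffix in SCANNED_SUFFIXES or path.name == ".env"):
            hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

A non-empty result from `scan_repo(".")` is a signal to rotate the key and move it into an environment variable or a vault; a key that has been committed should be treated as exposed.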

By 2026, with 80% of enterprises deploying AI agents, misconfigurations multiply exponentially.


- What starts as a solo developer’s shortcut scales catastrophically. NIST SP 800-218 flags this as “supply chain risk,” and SOC 2 auditors demand proof of LLM governance. Regulations such as the EU AI Act (enforced from 2026) classify high-risk systems and mandate data lineage tracking.

- 70% of CISOs underestimate shadow AI (SANS 2025).

How to Avoid the Trap

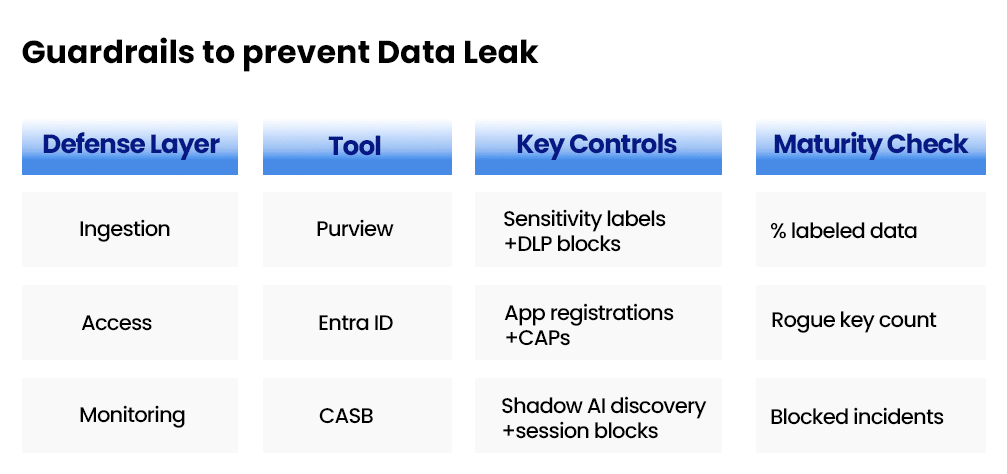

Layered defenses enforce zero-trust without killing velocity. It is better to start small and scale fast.

Zero-Trust Ingestion

Organizations can use tools such as Microsoft Purview or Collibra to apply sensitivity labels like “Highly Confidential” or “Internal Eyes Only” to critical data. Once labeled, Data Loss Prevention (DLP) policies help prevent these files from being uploaded to non-sanctioned domains or unauthorized platforms. Endpoint protection solutions, such as Microsoft Defender for Endpoint, further enforce these controls at the client level, while proxy filters provide an additional safeguard by detecting and blocking risky uploads to SaaS applications.
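The label-then-block flow can be illustrated as a pre-send check in an internal AI gateway. This is a sketch, not Purview’s API: it assumes your labeling policy stamps the label text into visible content markings, and the PII patterns are deliberately simple illustrations of what real DLP classifiers do far more thoroughly.

```python
import re

# Label text as it would appear in visible content markings (assumption:
# your labeling policy stamps these strings into headers or footers).
BLOCKED_LABELS = ("Highly Confidential", "Internal Eyes Only")

# Deliberately simple, illustrative PII patterns; production DLP uses
# much richer classifiers.
PII_PATTERNS = {
    "ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def dlp_verdict(text: str) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); any label or PII hit blocks the prompt."""
    lowered = text.lower()
    reasons = [f"label:{label}" for label in BLOCKED_LABELS if label.lower() in lowered]
    reasons += [f"pii:{name}" for name, pattern in PII_PATTERNS.items() if pattern.search(text)]
    return (not reasons, reasons)
```

Returning the reasons alongside the verdict lets the gateway log why a prompt was blocked, which feeds directly into DLP incident reporting.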

Pro tip: Extend this to GitHub by pairing Copilot policies with repository labels and automatically blocking public model calls in pull requests.
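A simple pull-request check along these lines could scan the added lines of a diff for public LLM hostnames. This is a sketch; the hostname list reflects well-known public API domains and is an assumption you should extend for your environment.

```python
# Hostnames of public LLM APIs (assumption: extend for your environment).
PUBLIC_LLM_HOSTS = (
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
)


def flag_public_model_calls(unified_diff: str) -> list[str]:
    """Return added lines in a unified diff that reference a public LLM host."""
    flagged = []
    for line in unified_diff.splitlines():
        # Only inspect added lines; skip the '+++' file header.
        if line.startswith("+") and not line.startswith("+++"):
            if any(host in line for host in PUBLIC_LLM_HOSTS):
                flagged.append(line[1:].strip())
    return flagged
```

In CI, you might feed it the output of `git diff origin/main...HEAD` (the branch name is an assumption) and fail the job when the list is non-empty. SDKs that rely on a default base URL will not contain the hostname literally, so this is a coarse first filter rather than complete coverage.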

Azure AD (Entra ID) Guardrails

All AI applications should be registered in Entra ID with carefully defined scopes to ensure precise access control. Permissions must follow the principle of least privilege: for example, an app that only needs calendar data should be granted Calendars.Read rather than more sensitive access such as Mail.Read. These permissions should be granted centrally through admin-consented app registrations rather than ad hoc user consent. Conditional Access Policies (CAPs) further strengthen security by blocking access from untrusted IP ranges, requiring multi-factor authentication, and enforcing device compliance.
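A least-privilege review of requested Microsoft Graph scopes can be partly automated. This sketch assumes you have already resolved an app registration’s permission IDs into scope names; the approved list is a hypothetical policy, and the “broad” heuristic (scopes ending in `.All` or containing `ReadWrite`) is an assumption, not an official classification.

```python
# Hypothetical policy: scopes an AI app may request without extra review.
APPROVED_SCOPES = {"User.Read", "Calendars.Read", "Files.Read"}


def review_scopes(requested: list[str]) -> dict[str, list[str]]:
    """Split requested Graph scopes into approved and needs-review buckets."""
    approved, needs_review = [], []
    for scope in requested:
        # Safety net: treat tenant-wide or write scopes as broad even if
        # someone later adds them to the approved list.
        broad = scope.endswith(".All") or "ReadWrite" in scope
        if scope in APPROVED_SCOPES and not broad:
            approved.append(scope)
        else:
            needs_review.append(scope)
    return {"approved": approved, "needs_review": needs_review}
```

Anything landing in `needs_review` becomes a prompt for a human to justify the scope before admin consent is granted.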

In multi-tenant environments, Cross-Tenant Access Settings can be used to isolate vendors and prevent unintended data exposure. Additionally, sign-in logs should be audited weekly by filtering on the application names of services such as “Microsoft Copilot” or “OpenAI Chat,” helping organizations maintain visibility and quickly detect unauthorized or risky activity.
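The weekly review can be sketched over sign-in records shaped like Microsoft Graph `auditLogs/signIns` results, which expose `appDisplayName` and `userPrincipalName`. The watched app names are the examples from the text and are assumptions; check the exact display names that appear in your own tenant.

```python
import json
from collections import Counter

# App display names to watch (examples from the text); verify the exact
# names that appear in your own tenant's sign-in logs.
WATCHED_APPS = {"microsoft copilot", "openai chat"}


def load_graph_page(path: str) -> list[dict]:
    """Read one saved page of a Graph /auditLogs/signIns response."""
    with open(path, encoding="utf-8") as f:
        return json.load(f).get("value", [])


def ai_signins(records: list[dict]) -> list[dict]:
    """Keep sign-ins whose appDisplayName matches a watched AI service."""
    return [
        r for r in records
        if (r.get("appDisplayName") or "").lower() in WATCHED_APPS
    ]


def weekly_summary(records: list[dict]) -> Counter:
    """Count AI sign-ins per (userPrincipalName, appDisplayName) pair."""
    return Counter(
        (r.get("userPrincipalName"), r.get("appDisplayName"))
        for r in ai_signins(records)
    )
```

Matching case-insensitively on the app name, rather than on user principal names, is what lets a single filter cover every user who touched the service.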

Shadow AI Discovery

Organizations should deploy Cloud Access Security Brokers (CASBs) such as Microsoft Defender for Cloud Apps, Zscaler, or Netskope to gain visibility into AI usage across their environment. These tools help identify unsanctioned data flows, including ChatGPT logins from corporate VPNs or API calls to external platforms like Claude. Once detected, security teams can block, alert, or sandbox risky sessions to reduce potential exposure.
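At its core, the CASB decision is a classification of each outbound request followed by a policy action. The sketch below illustrates that logic only; the domain lists are assumptions (commercial CASBs maintain curated app catalogs), and the sanctioned hostname is hypothetical.

```python
from urllib.parse import urlparse

# Illustrative lists (assumptions); commercial CASBs maintain curated catalogs.
SANCTIONED_AI_HOSTS = {"contoso-ai.openai.azure.com"}  # hypothetical resource
PUBLIC_AI_DOMAINS = {
    "chatgpt.com", "chat.openai.com", "api.openai.com",
    "claude.ai", "api.anthropic.com", "gemini.google.com",
}

# Action per category; shadow AI could equally map to "block" or "sandbox".
POLICY = {"sanctioned": "allow", "shadow_ai": "alert", "other": "allow"}


def classify(url: str) -> str:
    """Label a proxied request as sanctioned, shadow_ai, or other."""
    host = (urlparse(url).hostname or "").lower()
    if host in SANCTIONED_AI_HOSTS:
        return "sanctioned"
    # Match the listed domain itself or any of its subdomains.
    if any(host == d or host.endswith("." + d) for d in PUBLIC_AI_DOMAINS):
        return "shadow_ai"
    return "other"


def decide(url: str) -> str:
    """Return the policy action for a single request."""
    return POLICY[classify(url)]
```

Starting with “alert” rather than “block” gives teams visibility into real usage before enforcement tightens.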

For more advanced monitoring, User and Entity Behavior Analytics (UEBA) can profile “AI power users” and flag unusual spikes in prompt activity or access patterns. Combined with a Security Information and Event Management (SIEM) solution such as Microsoft Sentinel, these insights can be correlated to trigger incident tickets automatically, for example when a high-privilege user interacts with a public LLM. In practice, CASBs are highly effective at uncovering shadow AI activity and can surface many risks before they escalate into a breach.
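The spike-detection and correlation logic can be illustrated with a simple per-user z-score test. This is a sketch of the idea only: real UEBA and Sentinel analytics use richer models, and the threshold of 3 standard deviations and the `shadow_ai` category name are assumptions.

```python
from statistics import mean, pstdev


def is_spike(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Z-score test: is today's prompt count far above this user's baseline?"""
    if len(history) < 2:
        return False  # too little baseline to judge
    mu = mean(history)
    # Assume a spread of at least one prompt so a flat baseline
    # does not cause division by zero.
    sigma = pstdev(history) or 1.0
    return (today - mu) / sigma > threshold


def should_open_ticket(privileged: bool, category: str, spike: bool) -> bool:
    """Ticket rule: a privileged user touching a public LLM, or any spike."""
    return (privileged and category == "shadow_ai") or spike
```

Baselining each user against their own history, rather than a global average, keeps legitimate power users from drowning the queue in false positives.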

Take Control Now

Protecting your organization from unintended data exposure through public LLMs begins with swift, practical steps. Start by auditing your environment: use tools such as Microsoft Defender XDR (formerly Microsoft 365 Defender) to scan Entra ID for rogue API keys and app credentials, and review sign-in logs to identify any interactions with external AI services. Early visibility is key to uncovering hidden risks.
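Part of that audit can be scripted against app registrations exported from Microsoft Graph `GET /applications`, whose `passwordCredentials` entries carry `startDateTime` and `endDateTime`. This sketch assumes that export shape; the 180-day lifetime limit is a hypothetical policy you should set yourself.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_SECRET_LIFETIME = timedelta(days=180)  # hypothetical policy threshold


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp such as '2025-01-01T00:00:00Z' (UTC)."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def risky_secrets(applications: list[dict],
                  now: Optional[datetime] = None) -> list[tuple[str, str]]:
    """Flag long-lived or expired client secrets on app registrations."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for app in applications:
        for cred in app.get("passwordCredentials", []):
            start = parse_ts(cred["startDateTime"])
            end = parse_ts(cred["endDateTime"])
            if end - start > MAX_SECRET_LIFETIME:
                findings.append((app["displayName"], "long-lived secret"))
            elif end < now:
                findings.append((app["displayName"], "expired secret still registered"))
    return findings
```

Long-lived secrets are the ones most likely to end up pasted into notebooks and scripts, so they are a sensible first target for rotation.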

Next, establish foundational guardrails by implementing sensitivity labeling with Microsoft Purview and piloting a CASB solution. These controls help monitor data movement and prevent unauthorized sharing. Finally, scale responsibly by training teams on safe AI usage and measuring the impact through reductions in Data Loss Prevention (DLP) incidents. In a rapidly evolving AI landscape, timely action enables organizations to innovate confidently while keeping critical data secure.

AUTHOR

Ramesh Karthikeyan

Ramesh Karthikeyan is a results-driven Solution Architect skilled in designing and delivering enterprise applications using Microsoft and cloud technologies. He excels in translating business needs into scalable technical solutions with strong leadership and client collaboration.