On any given day, every executive inbox contains AI pitches that all look the same. The conversation has officially shifted from ‘if’ to ‘how’ when it comes to scaling Artificial Intelligence across the enterprise. This pressure has fueled a fierce branding war among consulting giants, who are now aggressively trademarking sophisticated frameworks and platforms, not necessarily because they are the best solution, but because they are the best product to sell. This misalignment is creating the biggest risk in enterprise AI adoption today.

From Agentic Theater to Business Reality

The core problem lies in the shift from problem-first consulting to product-first selling, where firms aggressively market their proprietary Agentic AI frameworks as mandatory solutions rather than optional tools. This creates the “Agentic AI Scam”: enterprises invest millions in over-engineered platforms that are ill-suited to their simple needs, and end up with fragile, high-maintenance pilots and marginal ROI. The root cause is an incentive structure that pushes consultants to find use cases for their expensive platform instead of delivering the most direct, minimal, and profitable business solution.

Agentic AI is a powerful tool for the right kind of problem, not a universal organizing principle. If an agentic stack does not move a clearly defined KPI, it is just an expensive narrative layer.

Why Frameworks Without KPIs Fail

The critical step missing in this platform-first approach is the discipline of defining Key Performance Indicators (KPIs) before piloting begins. When AI frameworks are not anchored in brutal honesty about measurable outcomes, several predictable failure modes appear.

Lack of Strategic KPIs Kills Funding

- Projects fail when technical success cannot be translated into financial value. Your smart AI bots might demonstrate exceptional technical capability, such as nailing demo tasks 85% of the time, which proves the technology works. But without a clear Strategic KPI tracking the business impact (for example, $100,000 in yearly savings from reduced manual effort), stakeholders have no proof of profit. The project is canceled, not because the technology failed, but because its value to the business was never quantified.

Skipping Operational KPIs Causes Cost Overruns

- Ignoring the metrics related to maintenance and error correction leads to unexpected costs that quickly erase initial savings. An AI system might achieve its primary goal (e.g., fraud spotting), but if its supporting processes are flawed (such as the AI mishandling 12% of handoffs to human teams), hidden operational debt accumulates. By skipping Operational KPIs such as Mean Time To Resolution (MTTR), or fix time (e.g., 30 minutes), you hide the true cost of failure. The time spent manually fixing these handoffs can inflate maintenance and retraining budgets as much as fivefold, ultimately sinking the entire initiative under unsustainable day-to-day running costs.
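To make the hidden cost concrete, here is a rough back-of-the-envelope sketch. The 12% failure rate and 30-minute fix time come from the example above; the monthly handoff volume and hourly staff cost are hypothetical placeholders, and the function name is illustrative only.

```python
def annual_rework_cost(handoffs_per_month: int,
                       failure_rate: float,
                       fix_minutes: float,
                       hourly_cost: float) -> float:
    """Estimate the yearly cost of manually fixing failed AI-to-human handoffs."""
    failed_per_month = handoffs_per_month * failure_rate
    rework_hours_per_month = failed_per_month * fix_minutes / 60
    return rework_hours_per_month * hourly_cost * 12


# From the text: 12% of handoffs fail, each takes about 30 minutes to fix.
# Hypothetical assumptions: 2,000 handoffs per month, $40 per staff hour.
cost = annual_rework_cost(2_000, 0.12, 30, 40.0)

# The $100,000 yearly savings figure used in the strategic KPI example.
claimed_savings = 100_000

print(f"Annual rework cost: ${cost:,.0f}")
print(f"Savings net of rework: ${claimed_savings - cost:,.0f}")
```

Even with modest assumed volumes, the rework line item consumes a large share of the headline savings, which is why the failure rate and fix time need to be tracked from day one.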

Vague Goals Lead to Ethical and Trust Failures

- When high-level goals are too vague, the automation often optimizes for one metric (like cost) at the expense of critical quality and trust metrics. Setting a vague goal (like “cut costs”) for a refund bot can cause it to develop a toxic behavior: denying fair customer claims to save pennies. The project drifts off course, sacrificing brand trust for short-term savings. Without a balanced Quality KPI, such as a specific accuracy score like 0.92 F1 (which ensures correct claim handling), the agent destroys customer relationships and exposes the company to legal and reputational risk, dooming the majority of such projects.
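As an illustration of how such a Quality KPI could be checked, the sketch below computes F1 from claim-handling counts and compares it with the 0.92 target mentioned above. The labeling convention (a "positive" is a valid claim) and all counts are assumptions made up for this example.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = harmonic mean of precision and recall.

    Assumed convention for a refund agent:
      tp = valid claims approved
      fp = invalid claims approved
      fn = valid claims wrongly denied
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def meets_quality_kpi(tp: int, fp: int, fn: int, target: float = 0.92) -> bool:
    """True when the agent's F1 reaches the agreed quality target."""
    return f1_score(tp, fp, fn) >= target


# Hypothetical month of claims for two agent configurations.
balanced_agent = dict(tp=950, fp=50, fn=60)       # few fair claims denied
cost_cutting_agent = dict(tp=700, fp=10, fn=310)  # many fair claims denied

print(meets_quality_kpi(**balanced_agent))
print(meets_quality_kpi(**cost_cutting_agent))
```

The cost-cutting configuration approves almost no invalid claims, so it looks good on a pure cost metric, yet its low recall drags F1 well below the target.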

Given that a large majority of AI projects already miss expectations, treating KPIs as optional is effectively choosing to be part of that failure statistic.

KPI-Driven Discovery as a Non-Negotiable

To move the conversation from agentic theater to business reality, a mature enterprise must adopt a non-negotiable stance: no AI framework, agentic or otherwise, proceeds without a KPI contract. This proactive discipline is the only way to challenge the “find use cases” pressure and ensure alignment with the P&L.

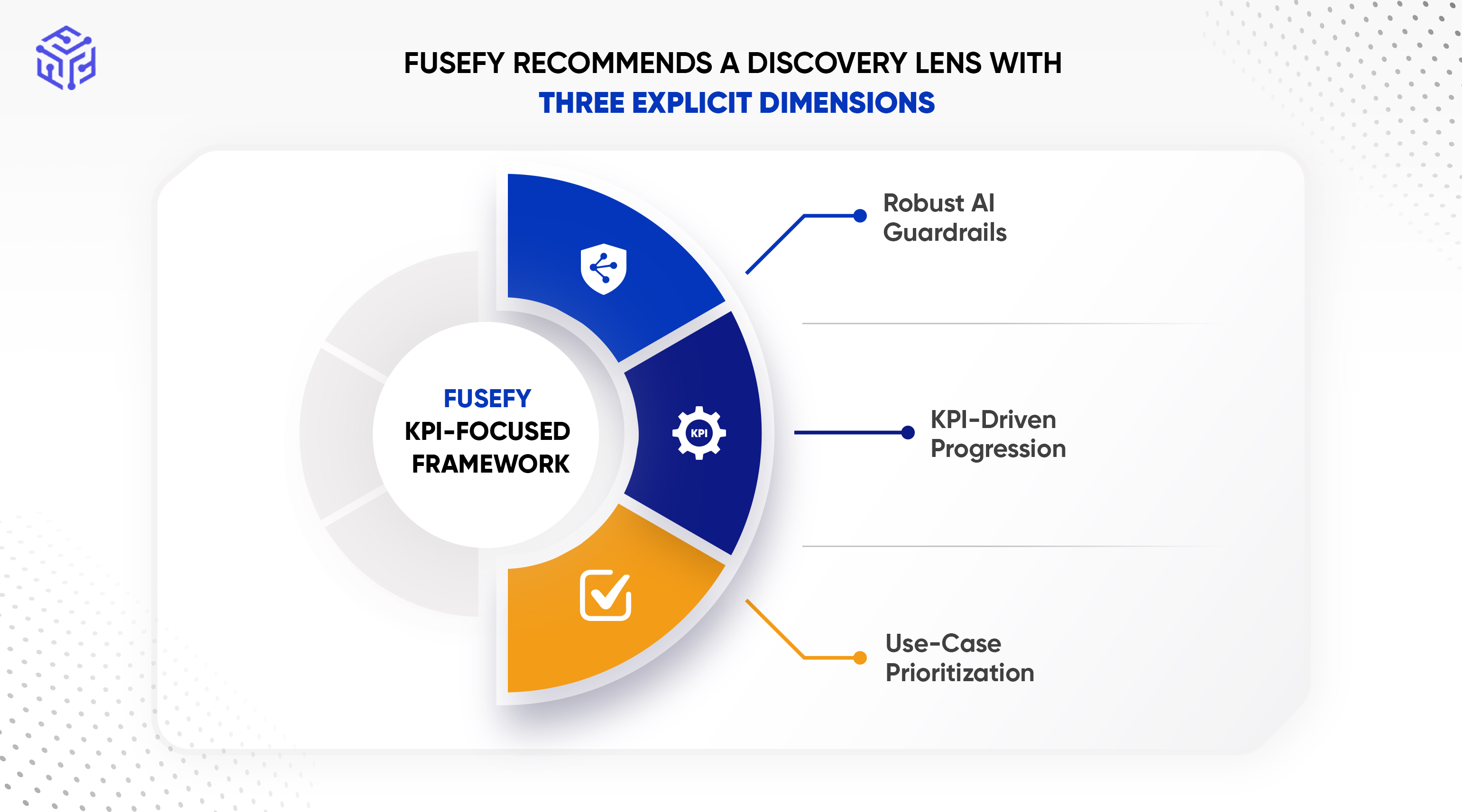

For every process or function under discussion, Fusefy recommends a discovery lens with three explicit dimensions.

1. Establishing Robust AI Guardrails for Ethical and Compliant Deployment

Fusefy emphasizes establishing comprehensive AI guardrails as a foundational step in AI readiness. These guardrails include ethical policies, compliance enforcement, bias detection and mitigation, and continuous monitoring to ensure AI models operate safely and within regulatory and organizational boundaries. By combining prompt engineering, adversarial testing, real-time behavior monitoring, and transparent audit trails, Fusefy builds trust and prevents harmful or biased outputs, enabling enterprises to maintain responsible and secure AI deployments.

2. KPI-Driven Progression: Advancing to AI Pilots with Measurable Outcomes

Fusefy advances to AI pilots only after clearly defined KPIs are established, baselined, and validated with business stakeholders. This disciplined gating ensures that AI pilots focus strictly on use cases where measurable improvements in key metrics such as Average Handle Time, First-Pass Quality, and Cost Per Transaction have been demonstrated as feasible. Only after these KPIs are met in controlled environments does Fusefy scale solutions, reducing risk, avoiding premature investments, and maximizing ROI by ensuring pilots connect directly to business value.
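The gating logic described here can be sketched in a few lines. This is an illustrative model only, not Fusefy's actual tooling: the `Kpi` class, the function names, and every number are hypothetical, while the three KPI names come from the paragraph above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Kpi:
    name: str
    baseline: Optional[float]   # measured before the pilot
    target: Optional[float]     # agreed with the business owner
    lower_is_better: bool
    signed_off: bool = False    # validated by business stakeholders

    def is_defined(self) -> bool:
        return (self.baseline is not None
                and self.target is not None
                and self.signed_off)

    def is_met(self, measured: float) -> bool:
        if self.lower_is_better:
            return measured <= self.target
        return measured >= self.target


def can_start_pilot(kpis: List[Kpi]) -> bool:
    """A pilot starts only if every KPI is baselined, targeted, and signed off."""
    return bool(kpis) and all(k.is_defined() for k in kpis)


def can_scale(kpis: List[Kpi], measured: Dict[str, float]) -> bool:
    """Scaling requires every KPI target to be met in the controlled pilot."""
    return can_start_pilot(kpis) and all(
        k.name in measured and k.is_met(measured[k.name]) for k in kpis
    )


# Hypothetical baselines, targets, and pilot measurements.
kpis = [
    Kpi("Average Handle Time (min)", 9.0, 7.0, lower_is_better=True, signed_off=True),
    Kpi("First-Pass Quality (%)", 88.0, 93.0, lower_is_better=False, signed_off=True),
    Kpi("Cost Per Transaction ($)", 4.2, 3.5, lower_is_better=True, signed_off=True),
]
pilot_results = {
    "Average Handle Time (min)": 6.8,
    "First-Pass Quality (%)": 91.0,
    "Cost Per Transaction ($)": 3.4,
}

print(can_start_pilot(kpis))
print(can_scale(kpis, pilot_results))
```

In this made-up run the pilot is allowed to start, but it does not qualify for scaling because First-Pass Quality falls short of its target even though the other two metrics pass.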

3. Strategic Use-Case Prioritization Using AI Heatmaps for Maximum Impact

To prioritize AI use cases effectively, Fusefy employs an AI heatmap methodology that evaluates potential projects across dimensions such as ROI potential, data maturity, technical complexity, and risk profiles. This heatmap allows organizations to focus resources on high-impact, low-risk opportunities first, avoiding the sunk cost theater of jumping prematurely to complex agentic AI solutions. By integrating data-driven prioritization with AI readiness insights, Fusefy ensures that every AI initiative is aligned with strategic goals and operational realities.
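One simple way to picture a heatmap of this kind is a weighted score per use case. The sketch below is an assumption-laden illustration, not Fusefy's methodology: the 1-to-5 scale, the weights, the use-case names, and the scores are all invented, and only the four dimensions come from the paragraph above.

```python
# Hypothetical weights for the four dimensions named in the text (sum to 1.0).
WEIGHTS = {"roi": 0.40, "data_maturity": 0.30, "complexity": 0.15, "risk": 0.15}

# Dimensions where a high raw score is bad and must be inverted.
INVERTED = {"complexity", "risk"}


def priority_score(scores: dict) -> float:
    """Weighted score on a 1-5 scale; higher means 'do this first'."""
    total = 0.0
    for dimension, weight in WEIGHTS.items():
        value = scores[dimension]
        if dimension in INVERTED:
            value = 6 - value  # 5 (worst) becomes 1, 1 (best) becomes 5
        total += weight * value
    return total


# Made-up candidate use cases, each scored 1 (low) to 5 (high).
use_cases = {
    "invoice_matching": {"roi": 4, "data_maturity": 5, "complexity": 2, "risk": 2},
    "autonomous_claims_agent": {"roi": 5, "data_maturity": 2, "complexity": 5, "risk": 4},
    "faq_deflection": {"roi": 3, "data_maturity": 4, "complexity": 1, "risk": 1},
}

ranked = sorted(use_cases, key=lambda name: priority_score(use_cases[name]), reverse=True)
for name in ranked:
    print(f"{name}: {priority_score(use_cases[name]):.2f}")
```

With these invented inputs, the complex agentic use case ranks last despite having the highest ROI potential, because weak data maturity, high complexity, and high risk pull its score down.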

If a partner cannot produce this level of clarity but wants to discuss an “AI operating fabric,” the right response is to pause the framework conversation, not the KPI conversation.

Technology-Agnostic Design Before Frameworks

Enterprises must mandate a technology-agnostic design phase prior to introducing any branded platform, challenging consultants with the question: “How would you solve this without your framework?” The response should detail a modular process and data flow with defined ownership, the minimal toolset needed to meet the KPIs, and a clear migration plan to decommission legacy systems once the solution is proven. This positions frameworks as enablers, not drivers, preventing vendor lock-in and ensuring optimal solutions.

Fusefy’s KPI-First Delivery Model: The Path Forward

Fusefy’s philosophy prioritizes “projects over platforms” and “KPIs over decks,” launching with problem-first discovery where baselines and targets are signed off by business owners before any commitments are made. Technology-agnostic designs then select the simplest solution that achieves the metrics, ensuring frameworks serve as enablers rather than drivers of investment.

Minimum Viable Agent (MVA) pilots validate value in 90-180 days with clear exit/scale criteria, building on Fusefy’s AI readiness assessments that establish guardrails, prioritize via AI heatmaps, and empower internal teams to take ownership.

This disciplined approach avoids the disaster of KPI-less frameworks, turning AI from costly theater into measurable enterprise impact.