The Enterprise AI Pilots

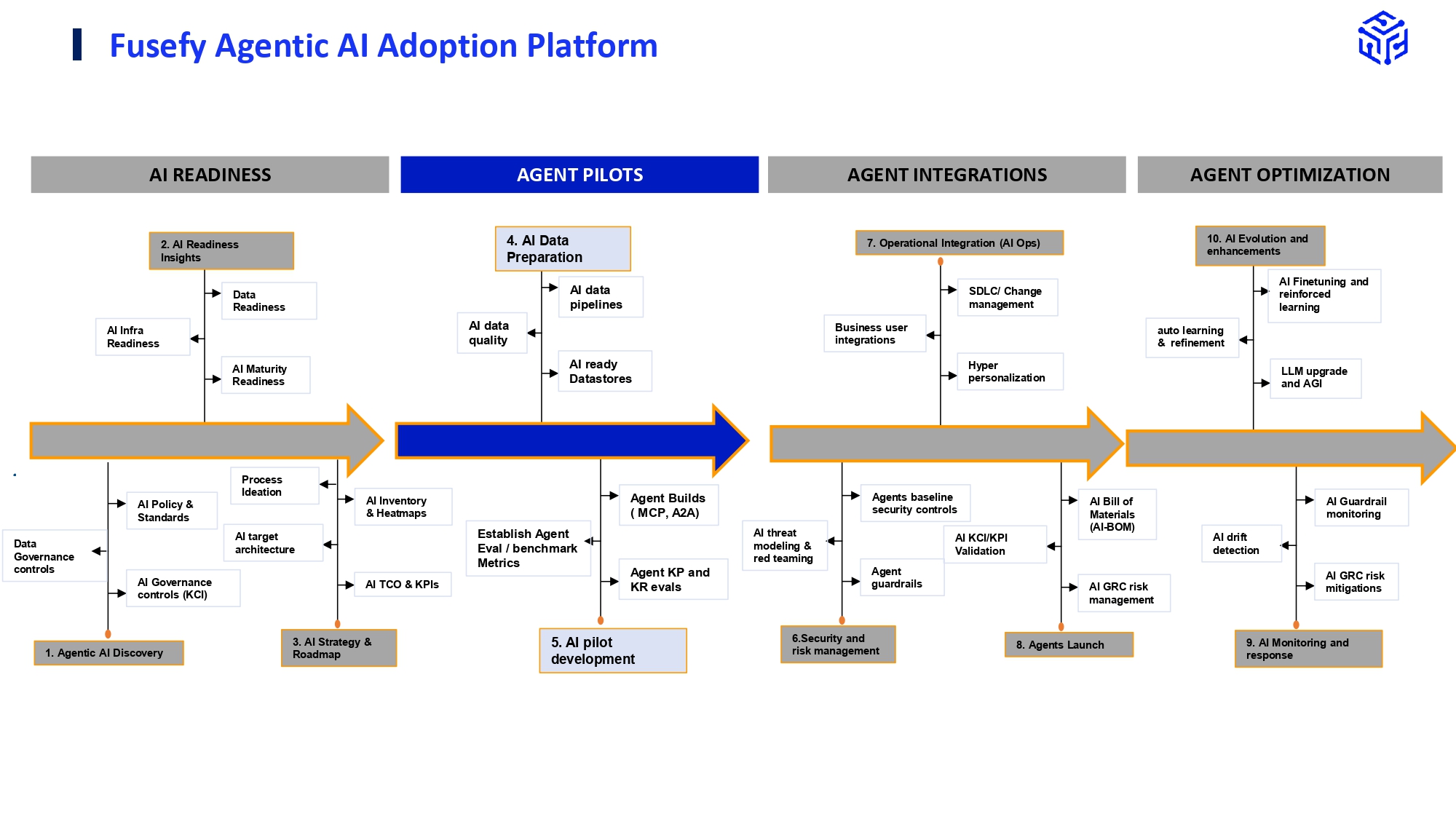

In Level 1, the AI Pilot, we move from blueprint to controlled experimentation. The aim of this stage is not scale but assurance. We don’t just test whether the AI works; we test whether it can be trusted to work securely and ethically within governed boundaries. In a healthcare claims environment, that boundary is sacred.

This is the stage where guardrails become active mechanisms and policies become measurable “Key Control Indicators” (KCIs), shaping how AI is built, tested, and evaluated. The outcome of this stage is a proof of concept (PoC) for the Fraud Agent that meets not just performance targets but also compliance expectations before it touches real-world data or operations.

Let’s examine how we ensure that the guardrails established during the Readiness stage are effectively upheld before moving AI Pilots into Production.

1. Building the Secure Sandbox (The “Lab”)

Before a developer writes a single line of code, the organization must have a place where experimentation can occur without risk to production systems or patient data. In healthcare claims, this “AI Lab” is not optional; it is a governance requirement that ensures every pilot aligns with the principle of medical data confidentiality.

Network Security & Zero Trust

In this stage, developers do not have direct access to the production environment. Instead, they work within a sandbox environment that is fully isolated from production systems. Protected Health Information (PHI), including patient identifiers, diagnosis codes, provider details, and payment histories, is identified and masked at the source, and only the masked data is provided to developers, for piloting purposes only.

The Zero Trust policy ensures there is no automatic trust between network components; every data call and access request from the sandbox to any test server must be authenticated and verified. This strict control model limits exposure and protects sensitive patient and payment data even during AI pilot testing.

Data Privacy

All PHI and sensitive identifiers are identified and masked before being moved into the pilot lab environment to ensure developers only access de-identified data. Data-at-rest, meaning stored data such as masked claim datasets and model checkpoints, is encrypted by default to prevent unauthorized access even if storage is compromised. Data-in-transit, which refers to data moving between developer machines, servers, and monitoring tools, is protected using strong transport-layer encryption protocols like TLS 1.2 or higher. Together, these encryption practices safeguard sensitive data throughout its lifecycle, maintaining compliance with privacy regulations and securing AI pilot activities.
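As an illustration of the "TLS 1.2 or higher" rule for data in transit, the sketch below builds a client-side TLS context with Python's standard `ssl` module. The helper name `make_client_context` is ours; how the context is wired into the lab's actual clients is left open.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client TLS context that refuses anything older than TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Enforce the minimum protocol version explicitly rather than relying
    # on interpreter or operating-system defaults.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A context like this can be passed to standard-library clients that accept an `SSLContext`, so the minimum version is enforced in one place instead of per connection.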

2. Meeting the Minimum KCI: Masked Data

Among all pilot-stage controls, data masking represents the core ethical and operational safeguard. In healthcare claims, data integrity and patient privacy are inseparable. This is where the organization’s data classification policy, defined during the AI Readiness stage, becomes active. The rule is absolute: no Protected Health Information (PHI) is allowed within the pilot environment. Any dataset used for testing the Fraud Agent must be verified as non-sensitive before loading into the sandbox.
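A pre-load check of this kind could look like the sketch below. The forbidden column names and the single SSN-like pattern are illustrative assumptions, not a complete PHI definition; a pattern scan can flag obvious leaks but cannot prove a dataset is free of PHI, so it complements the masking approval rather than replacing it.

```python
import re

# Hypothetical deny-list; a real one would come from the data classification policy.
FORBIDDEN_COLUMNS = {"patient_name", "ssn", "date_of_birth"}
# US Social Security number shape, used here as one example of a raw identifier.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def find_phi_violations(rows: list[dict]) -> list[tuple[int, str, str]]:
    """Return (row index, column, reason) for every suspicious cell."""
    violations = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if column.lower() in FORBIDDEN_COLUMNS:
                violations.append((index, column, "forbidden column"))
            elif isinstance(value, str) and SSN_PATTERN.search(value):
                violations.append((index, column, "SSN-like value"))
    return violations


def safe_to_load(rows: list[dict]) -> bool:
    """The dataset may enter the sandbox only if the scan finds nothing."""
    return not find_phi_violations(rows)
```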

Action: Preparing Compliant Test Data

A data engineer creates a large, controlled dataset of healthcare claims that is representative of production patterns but fully anonymized through masking scripts. Sensitive fields such as member IDs, provider names, policy numbers, and diagnosis codes are replaced with synthetic surrogates. To maintain analytical realism, the masking process preserves structural relationships: the frequency of certain claim types, the mix of provider specialties, and fraud-related anomalies remain statistically valid.

The result is a dataset that behaves like the real world but contains no traceable human information.
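One way a masking script can replace identifiers while keeping relationships intact is deterministic keyed hashing: the same input always maps to the same surrogate, so claims from one member still group together. The sketch below assumes that technique; the field names and `MASKING_KEY` are hypothetical. Note that keyed hashing on its own is pseudonymization, so whether it satisfies a given de-identification standard is a question for the compliance team.

```python
import hashlib
import hmac

# Hypothetical key, held outside the sandbox so surrogates cannot be reversed there.
MASKING_KEY = b"demo-only-masking-key"
# Assumed column names for the sensitive fields listed in the text.
SENSITIVE_FIELDS = ("member_id", "provider_name", "policy_number", "diagnosis_code")


def surrogate(field: str, value: str, key: bytes = MASKING_KEY) -> str:
    """Map a real value to a stable surrogate with a short field prefix."""
    digest = hmac.new(key, f"{field}:{value}".encode(), hashlib.sha256).hexdigest()
    return f"{field[:3].upper()}-{digest[:12]}"


def mask_claim(claim: dict, key: bytes = MASKING_KEY) -> dict:
    """Return a copy of the claim with sensitive fields replaced."""
    masked = dict(claim)  # leave the original record untouched
    for field in SENSITIVE_FIELDS:
        if field in masked:
            masked[field] = surrogate(field, str(masked[field]), key)
    return masked
```

Because non-sensitive columns such as claim type and amount pass through unchanged, claim-type frequencies and per-member or per-provider groupings computed on the masked data match those of the source.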

Outcome: Controlled Trust

The Fraud Agent now operates within a PHI-free data environment. It can detect suspicious claims, test precision thresholds, and refine its model parameters without crossing compliance lines. Because every action is logged and governed under the data classification framework, auditors can trace how the dataset was masked, who approved it, and how it was used. This creates a defensible trust trail from data engineer to model artifact.

When the KCI is marked as “Met”, it signals more than technical success. It affirms that ethical AI development is being practiced by design. No patient data was exposed, no privacy rule was compromised, and yet value was proven. This is what Trustworthy AI means in practice.

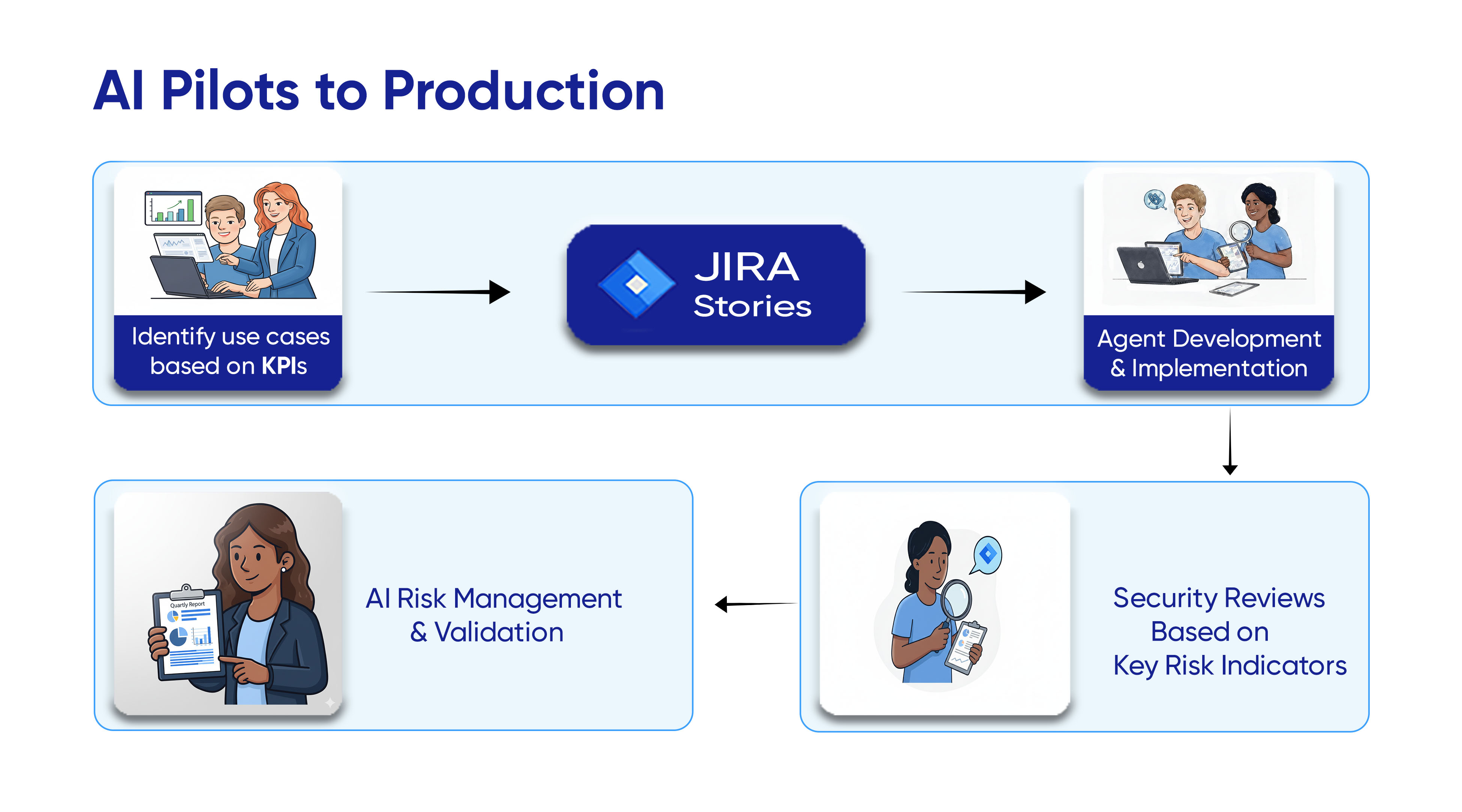

3. Connecting Jira Stories and Building the Fraud Agent

When the developer receives the Jira story “Build Fraud Agent PoC,” it might look like a standard development task, but behind that task is a structured governance loop connecting people, process, and platform.

AI Asset Inventory

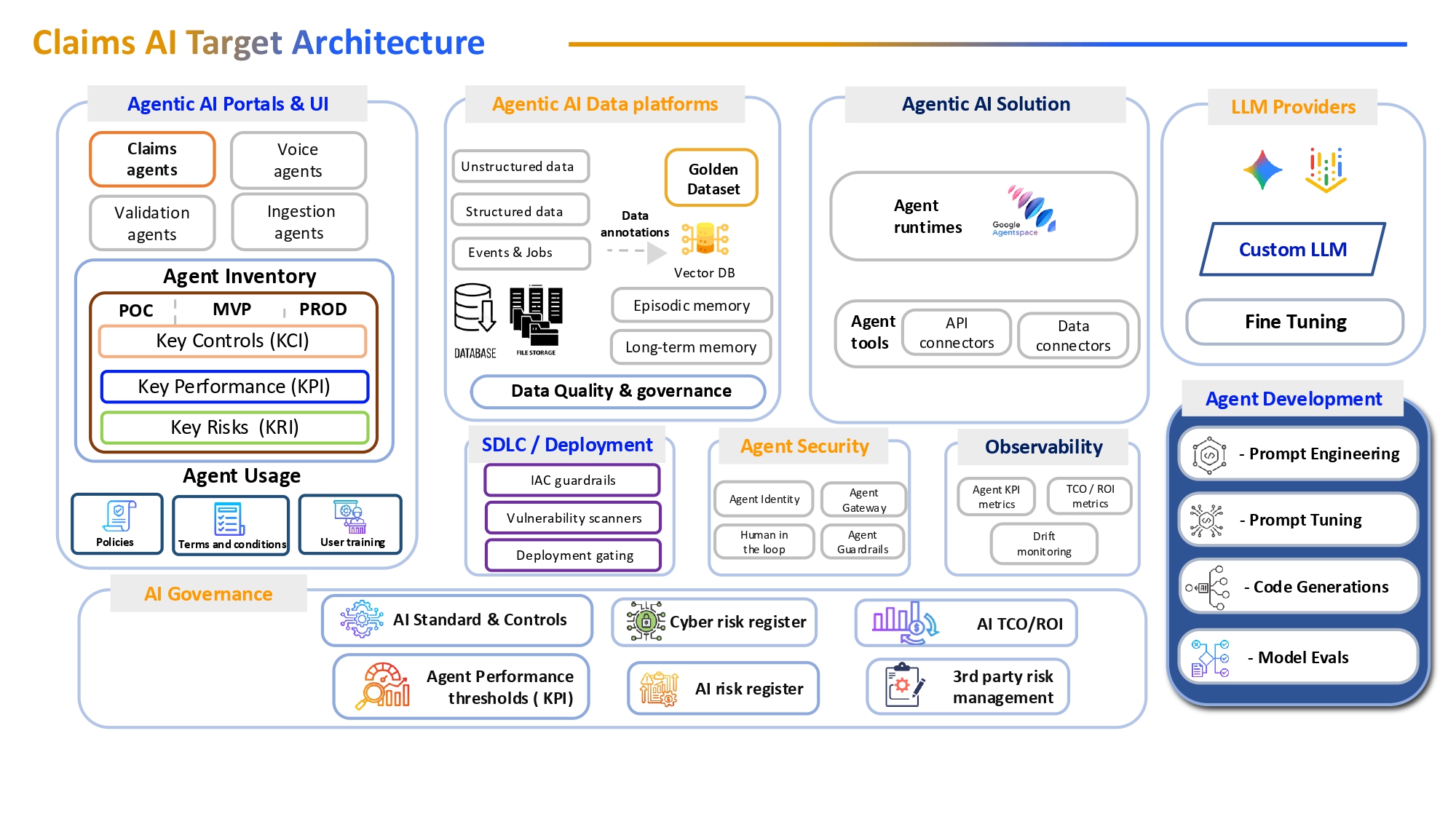

Once a Jira story is created to initiate an AI development task, the system automatically provisions a dedicated developer sandbox environment using the Model Control Platform (MCP). This provisioning event simultaneously registers a new entry in the AI Asset Inventory (e.g., FraudAgent-v0.1-pilot), ensuring every AI model is traceable from inception.

Metadata such as the developer’s ID, the origin of the dataset (masked claims), and the model’s version history are linked to this inventory record, establishing a comprehensive digital chain of custody throughout the model’s lifecycle.

Next, the developer connects their integrated development environment (IDE), such as Visual Studio, to the sandbox and uses the Agent Development Kit (ADK) to train the Fraud Agent model within the masked data environment. The ADK provides standardized tools and templates that help ensure consistent model creation, reproducibility, and adherence to organizational AI governance and compliance standards.

Throughout this process, all infrastructure events, training logs, and dataset accesses are automatically recorded, making the AI model fully auditable. This means that at any point, auditors can clearly see who trained the model, which data was used, and what controls were in place, all visible within a unified inventory view.

This workflow keeps AI development fully within the organization’s compliance perimeter and shows how regulated enterprises, such as those in healthcare, can responsibly scale AI initiatives while maintaining strict control and traceability.

4. Using an LLM as a Judge: Evaluating Trust Through AI

At this point in the pilot, the Fraud Agent has learned to identify potential fraud patterns from the masked claims. But technical promise alone is not enough: trust must be validated. The organization introduces a novel layer of assurance: an independent evaluation model, or what we call the “AI Judge.”

AI Evaluation & Observability

The Judge is an LLM trained to act as an expert claims adjuster, evaluating the Fraud Agent’s predictions on 100 new masked claims. It compares the agent’s detection results with ground truth patterns and established fraud rules, effectively simulating human review at machine scale.

The process achieves two goals:

1. It validates the technical accuracy of the Fraud Agent (precision, recall, and overall detection capability).

2. It operationalizes AI observability: a continuous feedback mechanism that ensures each AI system is auditable and explainable.
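To show how the first goal turns into numbers, the sketch below computes precision and recall from two lists of fraud flags: the Fraud Agent's predictions and the Judge's verdicts on the same claims. The call to the Judge LLM itself is deliberately left out; its verdicts are treated as given input, and the function name is ours.

```python
def precision_recall(predicted: list[bool], judged: list[bool]) -> tuple[float, float]:
    """Compare agent fraud flags with judge verdicts, claim by claim."""
    if len(predicted) != len(judged):
        raise ValueError("each prediction needs exactly one judge verdict")
    pairs = list(zip(predicted, judged))
    true_pos = sum(1 for p, j in pairs if p and j)       # flagged and confirmed
    false_pos = sum(1 for p, j in pairs if p and not j)  # flagged but rejected
    false_neg = sum(1 for p, j in pairs if not p and j)  # missed fraud
    # Precision: of the claims the agent flagged, how many did the judge confirm?
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    # Recall: of the claims the judge considers fraud, how many did the agent flag?
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall
```

Precision is the figure that matters for the gate: a low value means investigators would spend time on legitimate claims.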

This step aligns directly with governance. Evaluation is not an afterthought, but a defined Key Control Indicator (KCI). For the pilot to progress, it must meet predefined success conditions:

KCI Met: Data Classification = True (no PHI used in training or testing)

KCI Met: AI Asset Inventory = Logged (full traceability maintained)

KPI Met: Fraud Detection Precision ≥ 95% (benchmark precision requirement)
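These three conditions can be expressed as a simple promotion gate. The sketch below treats the 95% precision figure as a minimum threshold, which is our assumption, and the `PilotEvidence` structure is hypothetical.

```python
from dataclasses import dataclass

PRECISION_TARGET = 0.95  # assumed to be a minimum, not an exact value


@dataclass(frozen=True)
class PilotEvidence:
    """Evidence collected during the pilot (hypothetical structure)."""
    phi_free: bool          # Data Classification KCI
    inventory_logged: bool  # AI Asset Inventory KCI
    precision: float        # Fraud Detection Precision KPI, as a fraction


def gate_report(evidence: PilotEvidence) -> dict:
    """Evaluate each condition separately so a failure is easy to locate."""
    return {
        "KCI Data Classification": evidence.phi_free,
        "KCI AI Asset Inventory": evidence.inventory_logged,
        "KPI Fraud Detection Precision": evidence.precision >= PRECISION_TARGET,
    }


def pilot_complete(evidence: PilotEvidence) -> bool:
    """The pilot closes only when every condition is met."""
    return all(gate_report(evidence).values())
```

Keeping the per-condition report separate from the final yes/no decision means a failed gate points directly at the control that needs attention.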

Once these conditions are met, the pilot may be formally declared complete. The organization now holds evidence-backed assurance that the AI performs securely, ethically, and effectively under controlled circumstances.

The “AI Judge” concept also reinforces the trustworthy AI framework’s recursive nature: AI systems evaluating other AI systems for fairness, safety, and compliance. In a healthcare claims setting, this provides leadership confidence that automation can scale without compromising accountability or patient trust.

The pilot has now shown that the AI can perform securely in isolation. Next, we move to Level 2: AI Integration, where the agent connects to live, unmasked PHI.

At the end of this level, stakeholders’ critical questions are likely to be: How does the agent interact with other business systems? How are ongoing risks monitored, and how will continual compliance be achieved at scale? Let’s explore those questions in our next blog.