Beyond Pilots: A hands-on workshop for building Secure Agentic AI with Ashish Rajan

The Blueprint for Trustworthy AI in Healthcare Claims

(AI Readiness)

Before jumping into pilots, we focus on AI Readiness, the foundation of success. This “measure twice, cut once” phase puts governance and compliance in place before any code is written. Skipping it is a primary reason AI projects fail or trigger compliance risks.

Here’s how we build the blueprint for our healthcare claims processing use case by establishing our initial controls.

The Governance Workshop: Before development, we align Legal, Compliance, IT, and Business leaders on governance and risk.

Accountability & Ownership

We formally assign a Business Sponsor and a Technical Owner for the Claims AI initiative.

Governance & Ethical Data Use

In line with the EU AI Act and HIPAA, claims data is used solely for claims processing and never for profiling or discriminatory purposes. Every use of the data must be governed, compliant, and legally approved.

Jurisdictional & Regulatory Compliance

We align with HIPAA as our primary regulatory framework, guiding our security, privacy, and governance controls to ensure full compliance from the start.

Risk Register

We establish our AI Risk Register and record the first Key Risk Indicator (KRI).
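To make the idea concrete, a risk register with KRIs can be sketched as a small data structure. This is only an illustration: the field names, the example risk "R-001", and the threshold are hypothetical and are not taken from the workshop's actual register or GRC tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KeyRiskIndicator:
    """A measurable signal attached to a risk (hypothetical fields)."""
    name: str
    threshold: float            # the KRI is breached when the value exceeds this
    current_value: float = 0.0

    def is_breached(self) -> bool:
        return self.current_value > self.threshold


@dataclass
class RiskEntry:
    risk_id: str
    description: str
    owner: str
    kris: List[KeyRiskIndicator] = field(default_factory=list)


@dataclass
class RiskRegister:
    entries: List[RiskEntry] = field(default_factory=list)

    def add(self, entry: RiskEntry) -> None:
        self.entries.append(entry)

    def breached(self) -> List[str]:
        """Return the IDs of risks with at least one breached KRI."""
        return [
            e.risk_id
            for e in self.entries
            if any(k.is_breached() for k in e.kris)
        ]


# Illustrative first entry (made-up risk and KRI).
register = RiskRegister()
first_kri = KeyRiskIndicator("phi_fields_found_in_logs", threshold=0.0)
register.add(
    RiskEntry("R-001", "PHI exposed in agent logs", "Technical Owner", [first_kri])
)
```

Keeping each KRI next to its risk and owner means a breach can be traced straight back to the accountable person assigned in the governance workshop.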

Data Governance: Next, we define the data foundation for the AI.

Data Sourcing & Lineage

We use our internal claims database (production) and a third-party fraud detection feed.

Data Classification

All datasets are labeled as PUBLIC, INTERNAL, SENSITIVE, or PHI. The claims database is classified as PHI to trigger strict privacy and handling controls.
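One way to picture how a label "triggers" controls is a lookup from classification to required handling rules. The sketch below uses the four labels from the text. The control names, the dataset keys, and the label given to the fraud feed are assumptions made for illustration only.

```python
from enum import IntEnum
from typing import Dict, FrozenSet


class DataClass(IntEnum):
    """The four labels from the schema, ordered from least to most restricted."""
    PUBLIC = 0
    INTERNAL = 1
    SENSITIVE = 2
    PHI = 3


# Hypothetical handling controls per label (not an official control catalogue).
CONTROLS: Dict[DataClass, FrozenSet[str]] = {
    DataClass.PUBLIC: frozenset(),
    DataClass.INTERNAL: frozenset({"access_logging"}),
    DataClass.SENSITIVE: frozenset({"access_logging", "encryption_at_rest"}),
    DataClass.PHI: frozenset(
        {"access_logging", "encryption_at_rest", "field_masking"}
    ),
}

DATASETS: Dict[str, DataClass] = {
    "claims_db": DataClass.PHI,          # stated in the text
    "fraud_feed": DataClass.SENSITIVE,   # assumption: the text gives no label
}


def required_controls(dataset: str) -> FrozenSet[str]:
    """Look up the controls a dataset must satisfy based on its label."""
    return CONTROLS[DATASETS[dataset]]


print(sorted(required_controls("claims_db")))
```

Because the controls hang off the label rather than the dataset, reclassifying a data store changes its handling rules in one place.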

Vendor & Third-Party Risk Management

We conduct a vendor risk assessment and review legal contracts to ensure external providers cannot leak, retain, or train on our data.

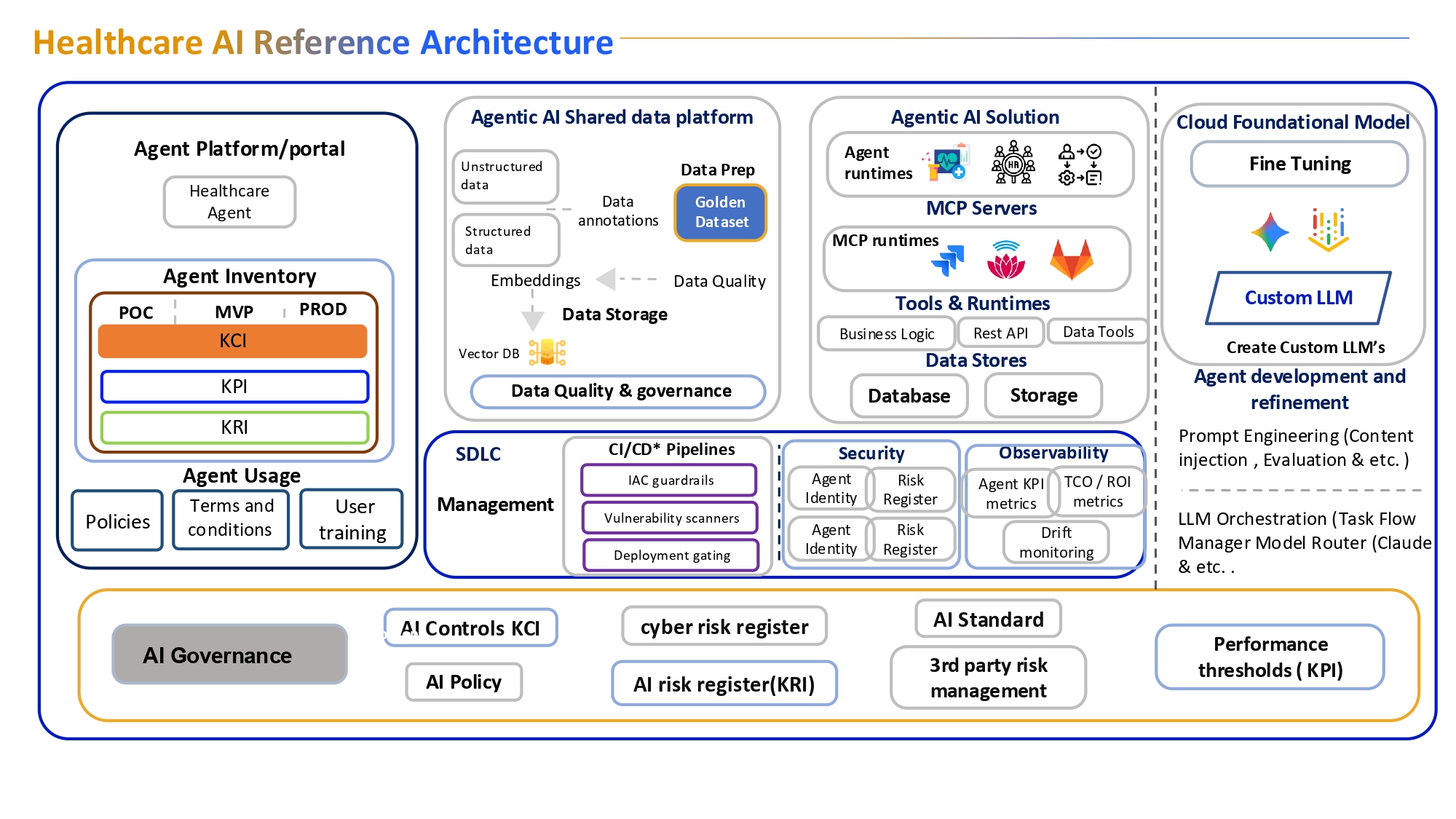

The Target State Architecture (How It Will Work): Now, we design the system.

Decompose the Use Case: The “Process a Claim” process is broken into a five-agent workflow (Ingest, Validate, Adjudicate, Fraud, MCP).

Agent Identity & Action Attribution

Every agent operates with a unique, auditable identity, ensuring full transparency and traceability across the workflow.
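As a rough sketch of action attribution, each of the five agents can carry its own ID, and every action can be written to an audit log under that ID. The class names, the log fields, and the strictly sequential order below are assumptions; the text names the agents but does not describe how they are implemented or orchestrated.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

AGENT_NAMES = ["Ingest", "Validate", "Adjudicate", "Fraud", "MCP"]


@dataclass(frozen=True)
class AgentIdentity:
    """An agent name paired with a unique ID (a random UUID in this sketch)."""
    name: str
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class AuditLog:
    records: List[Dict[str, str]] = field(default_factory=list)

    def record(self, agent: AgentIdentity, action: str, claim_id: str) -> None:
        """Append one attributed action to the log."""
        self.records.append(
            {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "agent": agent.name,
                "agent_id": agent.agent_id,
                "action": action,
                "claim_id": claim_id,
            }
        )


def run_workflow(claim_id: str, log: AuditLog) -> List[AgentIdentity]:
    """Run placeholder steps in order, attributing each one to its agent."""
    agents = [AgentIdentity(name) for name in AGENT_NAMES]
    for agent in agents:
        # Real agent logic would go here; this sketch only logs the step.
        log.record(agent, f"{agent.name.lower()}_step", claim_id)
    return agents


audit_log = AuditLog()
run_workflow("CLM-0001", audit_log)  # "CLM-0001" is a made-up claim ID
```

With this shape, any entry in the log answers "which agent did what, to which claim, and when" without relying on shared credentials.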

Secure Infrastructure Configuration

We define the production architecture with built-in continuous monitoring, enforcing compliance and data protection at all layers.

Human-in-the-Loop (HITL) & Consent

A dedicated Adjuster Review Dashboard allows human oversight for all flagged claims, maintaining accountability and informed consent.
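The routing behind such a dashboard might look like the minimal sketch below: flagged claims wait in a review queue until a human adjuster decides, and the adjuster's name is stored with the decision. The `Claim` fields, status strings, and function names are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Claim:
    claim_id: str
    flagged: bool
    status: str = "new"
    reviewed_by: Optional[str] = None


def route(claim: Claim, review_queue: List[Claim]) -> str:
    """Send flagged claims to human review; let the rest proceed automatically."""
    if claim.flagged:
        claim.status = "pending_human_review"
        review_queue.append(claim)
    else:
        claim.status = "auto_processed"
    return claim.status


def adjuster_decision(claim: Claim, adjuster: str, approve: bool) -> None:
    """Record a human decision; only claims awaiting review may be decided."""
    if claim.status != "pending_human_review":
        raise ValueError("claim is not awaiting human review")
    claim.status = "approved" if approve else "denied"
    claim.reviewed_by = adjuster


queue: List[Claim] = []
suspicious = Claim("CLM-0002", flagged=True)   # made-up example claim
route(suspicious, queue)
adjuster_decision(suspicious, adjuster="adjuster-17", approve=False)
```

Storing `reviewed_by` on the claim keeps the human decision attributable in the same way agent actions are.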

AI Evaluation & Observability

We define our Key Control Indicators (KCIs) to confirm that AI operations meet compliance and governance standards. Each control is tracked, measured, and validated by the appropriate stakeholders.
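Assuming KCI here means Key Control Indicator, one simple form is a measured coverage value compared against a target. The example below measures the share of data stores that carry a classification label. The metric, the store names, and the 100% target are illustrative assumptions, not the workshop's defined KCIs.

```python
from typing import Dict, Optional


def classification_coverage(stores: Dict[str, Optional[str]]) -> float:
    """Fraction of data stores that have a classification label assigned."""
    if not stores:
        return 0.0  # no stores means nothing has been verified yet
    labeled = sum(1 for label in stores.values() if label is not None)
    return labeled / len(stores)


def kci_met(value: float, target: float) -> bool:
    """A control indicator is met when the measured value reaches the target."""
    return value >= target


# Hypothetical inventory: one store labeled, one still unlabeled.
inventory = {"claims_db": "PHI", "fraud_feed": None}
coverage = classification_coverage(inventory)
print(f"coverage={coverage:.0%}, met={kci_met(coverage, target=1.0)}")
```

A metric like this gives stakeholders a number to validate rather than a statement of intent.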

The Project Plan (How We Will Build): Finally, we translate this blueprint into Jira stories.

Epic: AI-Powered Claims Adjudication.

Story (KCI): As a GRC Lead, create the ‘Prohibited Use’ AI policy and get Legal sign-off.

Story (KCI): As a Data Engineer, define the Data Classification schema for all data stores.

Story (Arch): As an Architect, design the 5-agent workflow for claims processing, including Agent Identity.

Story (Risk): As a Security Lead, create the AI Risk Register in our GRC tool.

This concludes the AI Readiness phase. Now we’re ready to build. See you in the next blog!