Discover how Fusefy’s FUSE framework builds trustworthy AI agents with reliable, transparent, and measurable business impact.

Your AI Vendor's SOC 2 Certificate & AI Risks

Not Every Business Process Needs an AI Agent

Discover how Fusefy’s FUSE framework builds trustworthy AI agents with reliable, transparent, and measurable business impact.

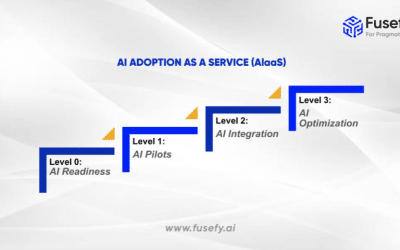

Fusefy - AI Adoption as a Service

Beyond the Black Box: How to Build Trustworthy Custom AI Solution Agents with FUSE-Driven Evaluation

Secure, Governed, and Testable by Design: The New Standard in AI Code Generation

Explore the evolving balance between human-in-the-loop systems and fully autonomous AI, and how platforms like Fusefy.ai bridge the gap.