Fusefy empowers enterprises to break through top-down FOMO and bottom-up resistance, enabling strategic, people-centered AI adoption.

The Rise of Agentic AI: Microsoft Build & Google I/O Double Down, and How Fusefy Can Help You Build at Scale

Microsoft and Google are embracing Agentic AI. Learn how Fusefy helps you build intelligent agents at scale.

Predicting and Preventing Tenant Churn with Fusefy’s AI Solution

AI Hype: Just ‘Silly Old Programs’? Narayana Murthy Weighs In

Narayana Murthy critiques India’s AI hype, calling many so-called AI tools just “silly old programs” disguised as innovation.

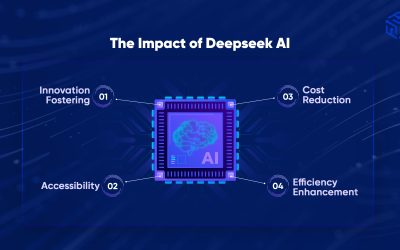

AI Cost Revolution: DeepSeek’s Impact & Fusefy’s Strategy

Navigating the EU AI Act: Key Implications, Timelines, and Prohibited AI Practices

Understand the EU AI Act’s key implications, timelines, and prohibited AI practices. Stay compliant and informed with our expert insights.