Discover Fusefy’s step-by-step process to build, deploy, and govern AI agents with speed, compliance, and enterprise-scale efficiency.

Why AI Frameworks without KPIs are a Disaster

The Ultimate Guide to Building Agentic AI Apps on Google Cloud Platform for Free

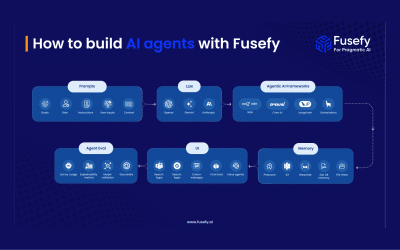

How to Build AI Agents with Fusefy: A Step-by-Step Guide

Discover Fusefy’s step-by-step process to build, deploy, and govern AI agents with speed, compliance, and enterprise-scale efficiency.

Comprehensive Guide to Agentic AI Frameworks: Choosing the Right Tool for Your AI Agent Development

The landscape of Agentic AI frameworks exploded in 2024-2025, offering developers unprecedented choices for building intelligent, autonomous AI systems. This comprehensive guide examines the leading frameworks across three categories: open-source community projects,...

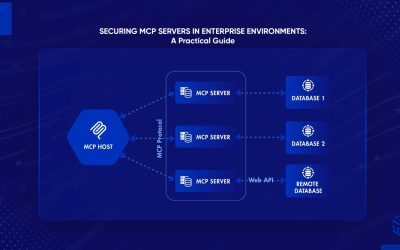

Securing MCP Servers in Enterprise Environments: A Practical Guide

As enterprises accelerate adoption of the Model Context Protocol (MCP) to connect AI models with internal tools and data, securing MCP servers has become a critical concern. With the rapid evolution of MCP clients like Claude Desktop, Cursor, and Windsurf, and the...