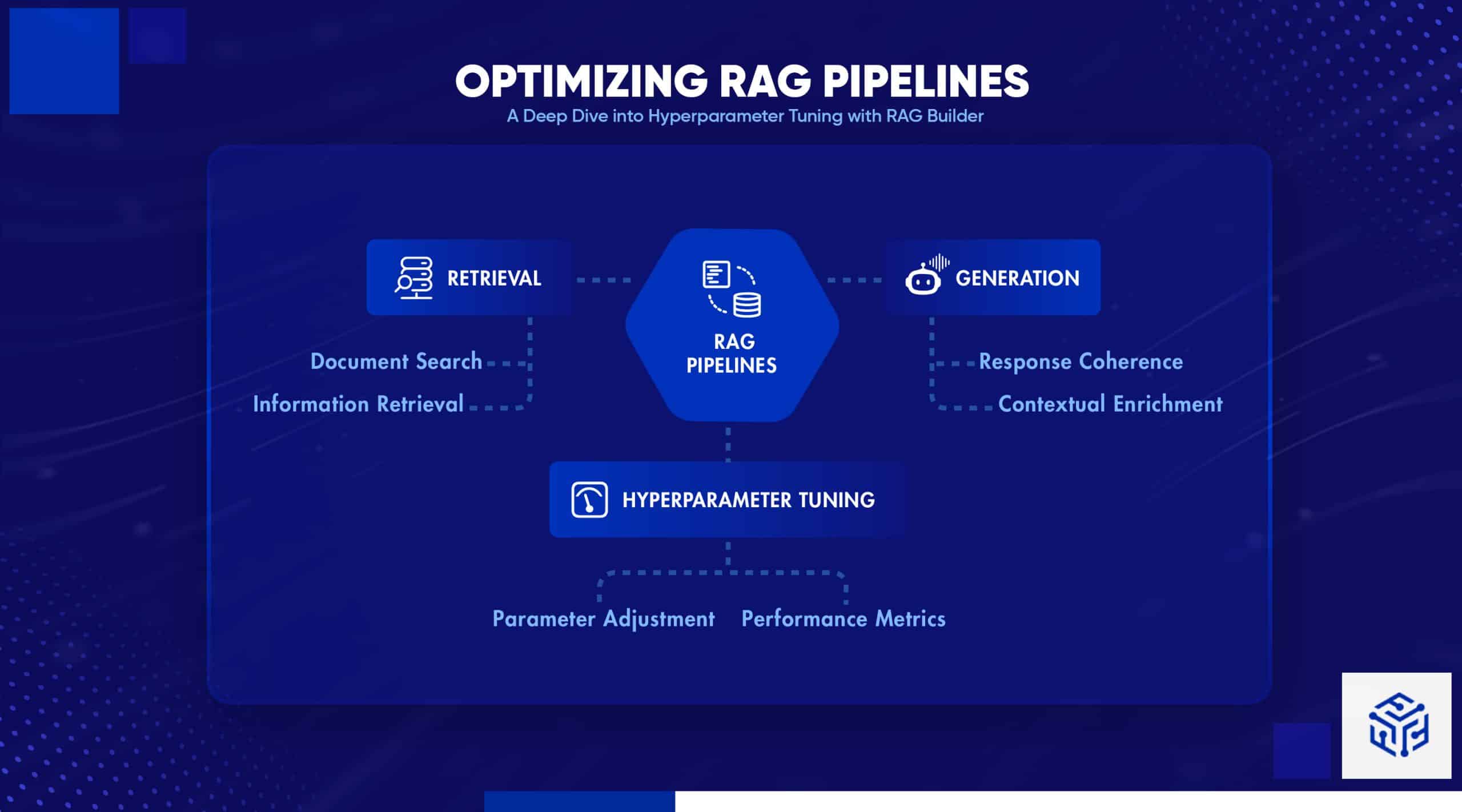

Retrieval-Augmented Generation (RAG) has emerged as a breakthrough method that blends the power of large language models (LLMs) with precise information retrieval. By coupling a retriever that searches through a document corpus with a generator that crafts coherent, contextually enriched responses, RAG pipelines are transforming everything from customer support chatbots to specialized research assistants.

However, the process of building a production-grade RAG system involves juggling many parameters, and that’s where hyperparameter tuning comes into play.

In this blog, we shed light on the key components of a RAG pipeline and discuss in detail the hyperparameters that matter most. We also cover best practices for tuning these systems and explore real-world use cases where strategic adjustments can have a significant impact.

Understanding the RAG Pipeline

At its core, a RAG pipeline consists of two main components:

- Retriever: Searches a large document corpus (often stored as vectors in a vector database) and selects the most relevant passages based on the input query.

- Generator: Feeds both the user’s query and the retrieved context into an LLM to generate a final answer that is both factually grounded and context-aware.

This combination allows RAG systems to mitigate one of the major shortcomings of standalone LLMs: hallucination. By anchoring generation in retrieved, external knowledge, RAG models produce responses that are more current and more likely to be accurate.
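To make the two stages concrete, here is a minimal sketch of the retrieve-then-generate flow. It is a toy, not a production design: the word-overlap `score` function stands in for a real vector search, the corpus and function names are hypothetical, and the final call to an LLM is left out, so the sketch stops at the prompt the generator would receive.

```python
from collections import Counter

def score(query: str, passage: str) -> int:
    """Toy relevance score: count of word occurrences shared by query and passage."""
    q = Counter(query.lower().split())
    p = Counter(passage.lower().split())
    return sum((q & p).values())

def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Retriever: return the k passages with the highest toy score."""
    ranked = sorted(corpus, key=lambda passage: score(query, passage), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Generator input: the user's query plus the retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical three-passage corpus.
corpus = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Refunds require the original receipt.",
]
top = retrieve("how are refunds processed", corpus, k=2)
prompt = build_prompt("how are refunds processed", top)
```

In a real pipeline, `score` would be replaced by similarity search over embeddings, and `prompt` would be sent to the LLM.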

Why Hyperparameter Tuning Matters in RAG

Each stage of the RAG pipeline comes with its own set of parameters. Manually testing every configuration isn’t practical: even a modest system can have hundreds or thousands of possible combinations of settings. Automated hyperparameter tuning, using techniques such as Bayesian optimization, can find a strong configuration for your specific dataset and use case in far fewer trials than an exhaustive search. Tuning not only helps boost retrieval accuracy; it can also improve latency and reduce compute costs.
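A quick count shows how fast the number of combinations grows. The search space below is purely illustrative: the parameter names and candidate values are assumptions chosen for the example, not recommended settings.

```python
from itertools import product
from math import prod

# Hypothetical search space: a handful of options per pipeline stage.
search_space = {
    "chunk_size": [500, 1000, 1500, 2000],
    "chunk_overlap": [0, 100, 200],
    "embedding_model": ["general", "domain_tuned"],
    "retriever": ["vector", "bm25", "hybrid"],
    "top_k": [3, 5, 10],
    "reranker": [None, "cross_encoder"],
    "prompt_template": ["strict", "open_ended"],
}

# The total is the product of the option counts: 4 * 3 * 2 * 3 * 3 * 2 * 2.
n_configs = prod(len(values) for values in search_space.values())

# Enumerating every combination is what an exhaustive grid search would have to evaluate.
all_configs = list(product(*search_space.values()))

print(n_configs)  # 864
```

Seven parameters with two to four options each already yield 864 full pipeline evaluations, which is why a guided search is attractive.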

Key Hyperparameters and Their Use Cases

1. Chunking Strategies and Chunk Size

What It Affects:

- Retrieval Precision: How well a document is split into meaningful segments.

- Processing Efficiency: Larger chunks mean fewer chunks to index and search, but each retrieved chunk adds more text to the LLM prompt, which can increase computational overhead.

Use Cases:

- FAQ Chatbots or Short Queries: Smaller chunk sizes (e.g., 500–1000 characters).

- Document Summarization: Larger chunks (e.g., 1500–2000 characters).

- Highly Structured Data: Use specialized splitters like MarkdownHeaderTextSplitter.
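As a rough illustration of what the chunk size parameter controls, here is a minimal character-based splitter. The `overlap` parameter is an assumption added for the example (overlapping neighbors is a common way to avoid cutting a sentence off from its context); real splitters usually also respect sentence or section boundaries.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character chunks that overlap their neighbors."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop once the remaining text is already covered by the previous chunk's tail.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]

# A 2,500-character document split with the defaults.
chunks = chunk_text("a" * 2500, chunk_size=1000, overlap=100)
print([len(c) for c in chunks])  # [1000, 1000, 700]
```

Treating `chunk_size` and `overlap` as tunable values, rather than constants, is what lets an optimizer compare the short-query and summarization settings listed above.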

2. Embedding Models

What It Affects:

- Semantic Matching: The quality of document embeddings for matching query semantics.

Use Cases:

- General-Purpose Applications: Use models like OpenAI’s text-embedding-ada-002.

- Domain-Specific Applications: Use fine-tuned or domain-specific models for better performance.
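Semantic matching usually comes down to comparing a query vector with document vectors, most often by cosine similarity. The sketch below uses tiny hand-written vectors as stand-ins for real embeddings; the document names and numbers are hypothetical, and an actual embedding model would return vectors with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional "embeddings" for three documents and one query.
doc_vectors = {
    "billing_faq": [0.9, 0.1, 0.0],
    "api_guide": [0.1, 0.9, 0.2],
    "release_notes": [0.0, 0.3, 0.9],
}
query_vector = [0.8, 0.2, 0.1]

ranked = sorted(
    doc_vectors,
    key=lambda name: cosine_similarity(query_vector, doc_vectors[name]),
    reverse=True,
)
print(ranked)  # ['billing_faq', 'api_guide', 'release_notes']
```

Swapping the embedding model changes the vectors, and therefore the ranking, which is why the model choice is worth treating as a hyperparameter.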

3. Retrieval Methods

What It Affects:

- Speed vs. Relevance Trade-Off: Choice of retrieval algorithm impacts performance.

- Hybrid Search: Combines dense vector and keyword search (e.g., BM25).

Use Cases:

- High-Recall Scenarios: Use vector similarity or hybrid search.

- Keyword-Sensitive Applications: Use BM25 for exact matches.
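Hybrid search needs some way to merge the dense and keyword result lists. One common option, used here as an assumption rather than something this post prescribes, is reciprocal rank fusion (RRF), which scores each document by the sum of `1 / (k + rank)` over the lists it appears in. The document IDs are hypothetical, and `k = 60` is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one list using RRF."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical results from a dense vector search and a BM25 keyword search.
dense_results = ["d2", "d1", "d3"]
keyword_results = ["d1", "d4", "d2"]

fused = reciprocal_rank_fusion([dense_results, keyword_results])
print(fused)  # ['d1', 'd2', 'd4', 'd3']
```

Documents that both retrievers rank highly rise to the top, while documents found by only one retriever are still kept, which helps recall.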

4. Re-ranking Techniques

What It Affects:

- Result Precision: Refines initial retrieval results.

- Latency: Can be high with sophisticated models such as cross-encoders, which score each query-document pair separately.

Use Cases:

- Complex Queries with Ambiguity: Use re-ranking models like BAAI/bge-reranker-base.

- Latency-Sensitive Deployments: Limit re-ranking to top candidates.
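Limiting re-ranking to the top candidates can be sketched as follows. The `overlap_score` function is a hypothetical, cheap stand-in for an expensive re-ranking model; in practice that callable would wrap a cross-encoder, and `n` would be the knob you tune against your latency budget.

```python
from typing import Callable

def rerank_top_n(
    query: str,
    candidates: list[str],
    score_fn: Callable[[str, str], float],
    n: int = 3,
) -> list[str]:
    """Re-score only the first n candidates; leave the rest in their original order."""
    head, tail = candidates[:n], candidates[n:]
    reranked = sorted(head, key=lambda doc: score_fn(query, doc), reverse=True)
    return reranked + tail

def overlap_score(query: str, doc: str) -> float:
    """Stand-in scorer: number of distinct words shared by query and document."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))

# Hypothetical first-stage retrieval output, best guess first.
candidates = [
    "shipping times vary",
    "reset your password from settings",
    "password rules",
    "contact support",
]
result = rerank_top_n("how to reset password", candidates, overlap_score, n=3)
```

Here the scorer runs three times instead of four; with thousands of candidates and a slow model, capping `n` is what keeps latency bounded.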

5. Prompt Templates for LLM Responses

What It Affects:

- Response Quality: Prompt structure impacts relevance and clarity.

- Flexibility vs. Strictness: Rigid prompts restrict creativity; flexible ones allow exploration.

Use Cases:

- Fact-Grounded Answers: Use templates focusing on retrieved context only.

- Creative or Exploratory Tasks: Use open-ended prompt templates.
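The difference between the two styles is easiest to see side by side. The wording of both templates below is an illustrative assumption, not a tested or recommended prompt; the point is that the template itself becomes a value you can switch during tuning.

```python
# Strict template: restricts the model to the retrieved context.
STRICT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you do not know.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)

# Open-ended template: treats the context as a starting point.
OPEN_TEMPLATE = (
    "Use the context below as a starting point, and feel free to explore related ideas.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)

TEMPLATES = {"strict": STRICT_TEMPLATE, "open_ended": OPEN_TEMPLATE}

def render_prompt(style: str, question: str, passages: list[str]) -> str:
    """Fill the chosen template with numbered passages and the user's question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return TEMPLATES[style].format(context=context, question=question)

strict_prompt = render_prompt(
    "strict",
    "What is the refund window?",
    ["Refunds are accepted within 30 days."],
)
```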

6. Optimization Settings

What It Affects:

- Tuning Efficiency: Affects how quickly optimal configurations are found.

- Resource Management: Balances tuning efforts with available resources.

Use Cases:

- Limited Compute Environments: Run fewer, focused trials.

- High-Stakes Applications: Invest in more trials for maximum performance.
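The trial budget is itself a setting. The sketch below uses plain random search as a deliberately simple stand-in for Bayesian optimization, so that the effect of `n_trials` is easy to see; it is not how any particular tuning tool works. The `toy_evaluate` function is hypothetical and simply pretends that 1,000-character chunks and `top_k = 5` are ideal. In a real setup it would run the pipeline on a test set and return a quality metric.

```python
import random

def run_trials(search_space: dict, evaluate, n_trials: int, seed: int = 0):
    """Sample n_trials random configurations and return the best one with its score."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in search_space.items()}
        trial_score = evaluate(config)
        if trial_score > best_score:
            best_config, best_score = config, trial_score
    return best_config, best_score

def toy_evaluate(config: dict) -> float:
    """Hypothetical objective: 0 is best, more negative is worse."""
    return -abs(config["chunk_size"] - 1000) / 1000 - abs(config["top_k"] - 5) / 10

space = {"chunk_size": [500, 1000, 1500, 2000], "top_k": [3, 5, 10]}

# A small budget for limited compute, a larger one when quality matters more.
quick_config, quick_score = run_trials(space, toy_evaluate, n_trials=5)
thorough_config, thorough_score = run_trials(space, toy_evaluate, n_trials=50)
```

With the same seed, the larger budget repeats the first five trials and then keeps exploring, so its best score can only match or improve on the smaller run.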

Automating Hyperparameter Tuning with RAGBuilder

Given the complex interplay of these parameters, tools like RAGBuilder are invaluable. RAGBuilder automates the process of hyperparameter tuning using Bayesian optimization. With pre-defined RAG templates and custom configurations, RAGBuilder can:

- Persist and reload optimized pipelines.

- Provide access to vector stores, retrievers, and generators.

- Easily deploy your tuned pipeline as an API service.

This kind of tool dramatically reduces manual trial-and-error, allowing you to focus on refining use cases rather than tuning each parameter by hand.

Optimizing RAG Pipelines: Best Practices and Final Insights

In short, tuning a RAG pipeline requires a systematic, data-driven approach. Here are some best practices:

- Start Simple: Use default configurations and scale complexity gradually.

- Test Data Quality: Use domain-specific queries and metrics.

- Monitor Resources: Track memory and latency during optimization.

- Iterate Gradually: Change one parameter at a time.

- Save and Reuse Configurations: Ensure consistency and efficient redeployment.
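For the last practice, saving the winning configuration can be as simple as writing it to a JSON file. This is a minimal sketch using only the Python standard library; the file name and configuration keys are hypothetical, and it does not reflect how any specific tool persists pipelines.

```python
import json
import tempfile
from pathlib import Path

def save_config(config: dict, path: Path) -> None:
    """Write the configuration as sorted, indented JSON so diffs stay readable."""
    path.write_text(json.dumps(config, indent=2, sort_keys=True))

def load_config(path: Path) -> dict:
    """Read a configuration previously written by save_config."""
    return json.loads(path.read_text())

# Hypothetical tuned configuration.
best_config = {
    "chunk_size": 1000,
    "chunk_overlap": 100,
    "retriever": "hybrid",
    "top_k": 5,
    "prompt_template": "strict",
}

# Round trip through a temporary directory.
with tempfile.TemporaryDirectory() as tmp_dir:
    config_path = Path(tmp_dir) / "rag_config.json"
    save_config(best_config, config_path)
    reloaded = load_config(config_path)
```

Keeping this file under version control alongside the evaluation results makes it easier to redeploy the same pipeline or compare it with later tuning runs.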

In conclusion, optimizing a RAG pipeline isn’t a one-size-fits-all task. Tuning hyperparameters such as the chunking strategy, embedding model, retrieval method, re-ranking approach, and prompt template can significantly boost both efficiency and accuracy. Tools like RAGBuilder further streamline the process, making it easier to deploy reliable, high-performing RAG systems.

Looking Beyond Hyperparameter Tuning

Hyperparameter tuning is just one part of optimizing RAG. Even well-tuned pipelines can fall short if the underlying data quality is poor. That’s where data labeling comes in. While prompt engineering can enhance responses, it cannot make up for poorly structured or mislabeled data.

To learn more, check out our blog on data labeling for effective RAG-based AI.

AUTHOR

Sindhiya Selvaraj

With over a decade of experience, Sindhiya Selvaraj is the Chief Architect at Fusefy, leading the design of secure, scalable AI systems grounded in governance, ethics, and regulatory compliance.